ENTIRE: Learning-based Volume Rendering Time Prediction

Jan 1, 2025

Zikai Yin, Hamid Gadirov, Jiri Kosinka, Steffen Frey

Abstract

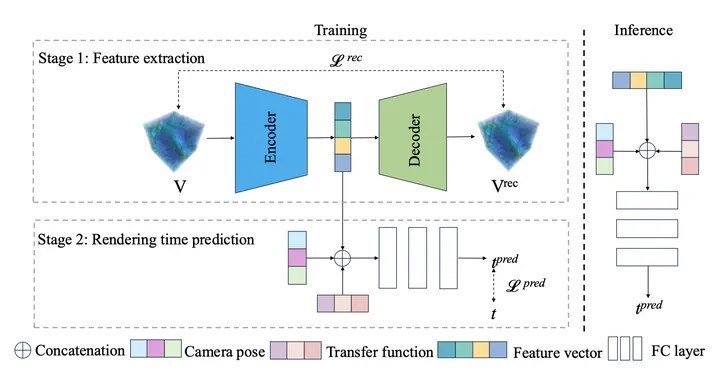

In this paper, we present ENTIRE, a novel approach for predicting volume rendering time quickly and accurately. This is a challenging problem because rendering time depends on several factors, including the content of the volume data, the camera configuration, and the transfer function. In our deep learning-based approach, we first extract from a volume a feature vector that captures the structure relevant to rendering performance. We then combine this feature vector with further rendering parameters (e.g., camera setup and transfer function settings) and use the result to make the final prediction. Our experiments with different rendering codes (CPU- and GPU-based) and variants (without and with single scattering) on various datasets demonstrate that our model efficiently achieves high prediction accuracy with fast response times. In two case studies, we showcase how ENTIRE enables dynamic parameter adaptation for stable frame rates and load balancing.
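The two-stage structure described above (a feature vector extracted from the volume, then combined with rendering parameters for the final prediction) can be illustrated with a minimal sketch. This is not ENTIRE's implementation. It makes several assumptions: the learned feature extractor is replaced by a fixed density histogram, and the deep prediction model is replaced by a linear least-squares regressor. The names `RenderParams`, `camera_distance`, `opacity_scale`, `synthetic_volume`, and `TRUE_W` are hypothetical, and the timings are synthetic values generated from hypothetical weights rather than measurements.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


def volume_features(voxels: Sequence[float], num_bins: int = 4) -> List[float]:
    """Stage 1 stand-in: normalized histogram of voxel densities in [0, 1].

    ENTIRE learns its feature vector; this fixed histogram is only a placeholder
    for a compact, fixed-length volume descriptor.
    """
    counts = [0] * num_bins
    for v in voxels:
        counts[min(int(v * num_bins), num_bins - 1)] += 1
    return [c / len(voxels) for c in counts]


@dataclass
class RenderParams:
    camera_distance: float  # hypothetical camera parameter
    opacity_scale: float    # hypothetical transfer-function parameter


def make_input(features: List[float], params: RenderParams) -> List[float]:
    """Stage 2 input: volume features concatenated with rendering parameters.

    No explicit bias term: the histogram sums to 1, so a constant offset can be
    represented through the bin weights.
    """
    return features + [params.camera_distance, params.opacity_scale]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_linear(xs: List[List[float]], ys: List[float]) -> List[float]:
    """Stand-in regressor: least squares via the normal equations X^T X w = X^T y."""
    d = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(d)] for i in range(d)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(d)]
    return solve(xtx, xty)


def predict(w: Sequence[float], x: Sequence[float]) -> float:
    """Predicted rendering time (synthetic units) for one combined input vector."""
    return sum(wi * xi for wi, xi in zip(w, x))


def synthetic_volume(rng: random.Random, n: int = 2000) -> List[float]:
    """Hypothetical volume: voxels drawn bin by bin with random bin weights."""
    weights = [rng.random() + 0.05 for _ in range(4)]
    bins = rng.choices(range(4), weights=weights, k=n)
    return [(b + rng.random()) / 4 for b in bins]


# Usage on synthetic data: TRUE_W are hypothetical weights, not measured timings.
rng = random.Random(0)
TRUE_W = [2.0, 5.0, 9.0, 14.0, -1.5, 6.0]
xs: List[List[float]] = []
ys: List[float] = []
for _ in range(40):
    params = RenderParams(rng.uniform(1.0, 3.0), rng.uniform(0.1, 1.0))
    x = make_input(volume_features(synthetic_volume(rng)), params)
    xs.append(x)
    ys.append(predict(TRUE_W, x))

w_fit = fit_linear(xs, ys)
print([round(w, 3) for w in w_fit])
```

Because the synthetic timings are exactly linear in the combined inputs, `fit_linear` should recover `TRUE_W` up to floating-point error. A real predictor would be trained on measured rendering times, and ENTIRE uses a deep learning-based model instead of this linear stand-in.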
